Maximizing Intellectual Capital through Systematic Combinatorial Discovery

“We are building a machine that can identify opportunities, adapt infrastructure, and roll out products at full speed” - Richard Fairbank, Capital One CEO

It’s now common knowledge that 90% of venture-backed startups will fail to return capital to investors. We very often discuss this as a law of startups akin to Moore’s Law and chalk up the failures to the uncertainty and ambiguity of the market. But what if this failure rate is replicable only because we’ve accepted it as such?

This traditional startup model is highly inefficient and inherently (and unnecessarily) risky. Startups fail not simply because of poor execution (if at all) but because they fail to build the right solution before running out of capital. Building the wrong solution for the given problem wastes tremendous resources - capital yes, but also time and opportunity. And this error stems from an easily identifiable but rarely discussed quirk of the traditional model - we far too rarely explore different potential solutions to the given problem. Current approaches celebrate conviction based (mostly) on founder confidence, but premature commitment to a single solution - falling in love with the solution not the problem - fundamentally and systematically eliminates potential breakthroughs.

The solution is obvious when framed in this way, of course - rather than commit ourselves to a single solution to the given problem which is highly unlikely to actually work, and waste months or years of time, sweat, and capital, we should choose a different model - systematic exploration of many potential solutions guided by empirical validation before committing to any singular direction.

Thomas Edison proclaiming he found “1000 ways to not build a light bulb” wasn’t merely a pithy remark but a keen insight into how breakthrough innovations emerge. His lab’s systematic exploration of filament materials, bulb designs, and manufacturing processes yielded not just the breakthrough illumination but a knowledge archive about these components. Four decades later, Bell Labs’ Harold Arnold channeled the same rigorous approach to transform the more limited audion into the world-changing vacuum tube, exploring and recombining components until not one but fifteen distinct variants emerged.

Maximizing our intellectual capital requires systematic combinatorial discovery, the core feature of a system I’ve been developing called the Kensho Model. We can transform the journey from idea to scaled business through three interconnected components:

- Opportunity Mapping: Systematic exploration of unmet jobs to be done within a given domain, ensuring that no promising direction goes unexplored

- Solution Exploration: Systematic generation and evaluation of diverse solution variants for each opportunity

- Discovery Simulation: Feedback loops that continuously test our simulated expectations against reality

By constructing a discovery-focused system from the start and applying it to every problem we encounter, we can dramatically increase the success rate of launching breakthrough businesses without reducing ambition. We can pursue nonobvious or avoided opportunities that the market would miss. And by systematizing this approach, we can explicitly accumulate knowledge and validated primitives to improve this combinatorial discovery approach as we scale.

Mapping Opportunity Spaces

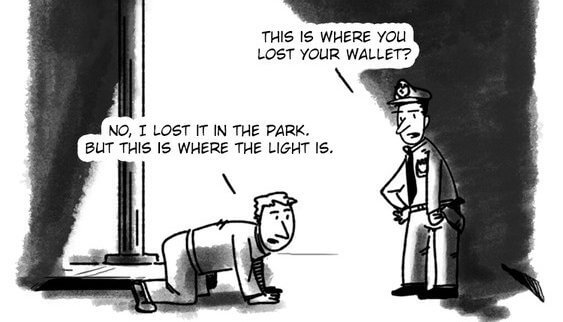

I’ve mentioned “opportunity spaces” several times now, but what are we actually referring to here? Every business domain, whether adtech or govtech or consumer products, contains numerous unmet needs that provide potential opportunities for us to solve with new products and businesses. Most companies explore only a tiny fraction of this space, most typically based on the founders’ experience or intuition - the streetlight effect manifested as strategy.

I’m not generally comfortable ambling around aimlessly in the dark and instead need an approach that enables the systematic exploration of entire landscapes for interesting and untapped opportunities.

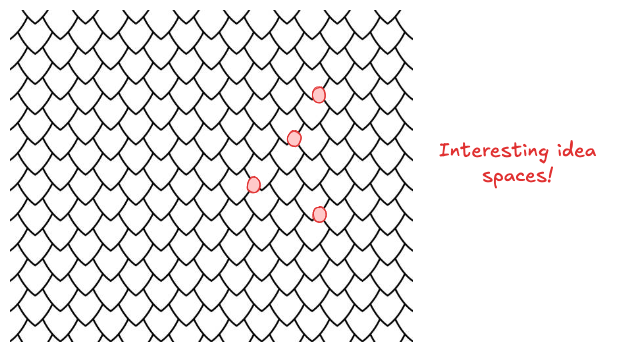

Breakthrough opportunities often exist in unexpected regions that traditional approaches miss, and especially at the intersection of (often siloed) disciplines. Network theorists call these structural holes, but I prefer Donald Campbell’s “Fish-Scale Model of Omniscience” in which breakthrough ideas often exist at the overlap of domains (our fish scales):

Traditional approaches might occasionally stumble upon these opportunities, but systematic exploration actively seeks them out - creating new possibilities for value creation that would be invisible to domain-specific exploration.

Opportunities Arise from Customer Needs

Every successful product and business succeeds by satisfying previously unmet customer needs. Said differently - opportunity mapping is literally the process by which we attempt to understand (potential) customer needs en masse. There are various methods and frameworks one could use for such an endeavor, but my preference is jobs to be done (JTBD). It’s clear, relatively straightforward, and crucially for us, can be systematically applied. This is not about defining customer segments - we’re not referring to SMBs or enterprise (these are sales strategies) - but about the underlying customer desires that, if met, would create substantial bidirectional value.

Ultimately we have three tasks to accomplish here:

- Identify and catalog all possible JTBDs within a domain at the present moment

- Map existing businesses against these JTBDs

- Assess how well each existing business is actually delivering against these JTBDs

And from this systematic, comprehensive mapping process, we reveal opportunity gaps - either poor delivery by existing businesses or total blue ocean opportunities.
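The three tasks above can be sketched in a few lines. This is a minimal illustration, not the actual Kensho tooling: the `Job` class, the `find_gaps` helper, the vendor names, the delivery scores, and the 0.5 cutoff are all hypothetical placeholders chosen for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Job:
    """One job to be done (JTBD) plus the businesses mapped against it."""
    statement: str
    # business name -> delivery score in [0, 1]; all values here are made up
    delivery: dict[str, float] = field(default_factory=dict)


def find_gaps(jobs: list[Job], poor_delivery: float = 0.5) -> dict[str, list[str]]:
    """Split jobs into blue-ocean gaps (no business) and poorly served gaps."""
    gaps = {"blue_ocean": [], "poorly_served": []}
    for job in jobs:
        if not job.delivery:
            # Nobody is mapped against this job at all.
            gaps["blue_ocean"].append(job.statement)
        elif max(job.delivery.values()) < poor_delivery:
            # Even the best existing business delivers below the cutoff.
            gaps["poorly_served"].append(job.statement)
    return gaps


jobs = [
    Job("Produce ad creative variants quickly", {"VendorA": 0.8, "VendorB": 0.6}),
    Job("Report cross-channel spend", {"VendorC": 0.3}),
    Job("Keep creative quality consistent without reviewing every piece"),
]
gaps = find_gaps(jobs)
print(gaps)
```

In practice the delivery score would itself be a composite of several measurements rather than a single number, but the gap logic stays the same: a job with no mapped business, or with only weak ones, is a candidate opportunity.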

One might question how exactly we might pull this off, and rightly so - this is a very abnormal process, and I’m not aware of any other companies attempting to carry it out. The internet itself - across text, audio, and video - offers ample information for us to aggregate, synthesize, and distill into usable forms, but a not insignificant volume of needs, wants, desires has likely never been documented for the given domain. Sometimes this is because information is proprietary and protected; other times because such needs have never been properly identified let alone articulated.

In these latter cases, then, we need to create new data if we are to complete our JTBD map. We need to talk, extensively, to customers across each landscape; not simply executives but people up and down the seniority stack; not simply one discipline, like sales, but all disciplines - engineering, finance, etc. And if we intend to speak to “everyone”, we’re faced with another challenge - how do we actually carry this out, rapidly and effectively? We need knowledgeable humans, of course, with deep domain expertise and curious minds, but humans scale very poorly. So we supplement these expert researchers with teams of generative interviewers that we’ll call simulants - AIs that we tune specifically to extract useful information out of 1000s of humans quickly, cheaply, effectively, in parallel.

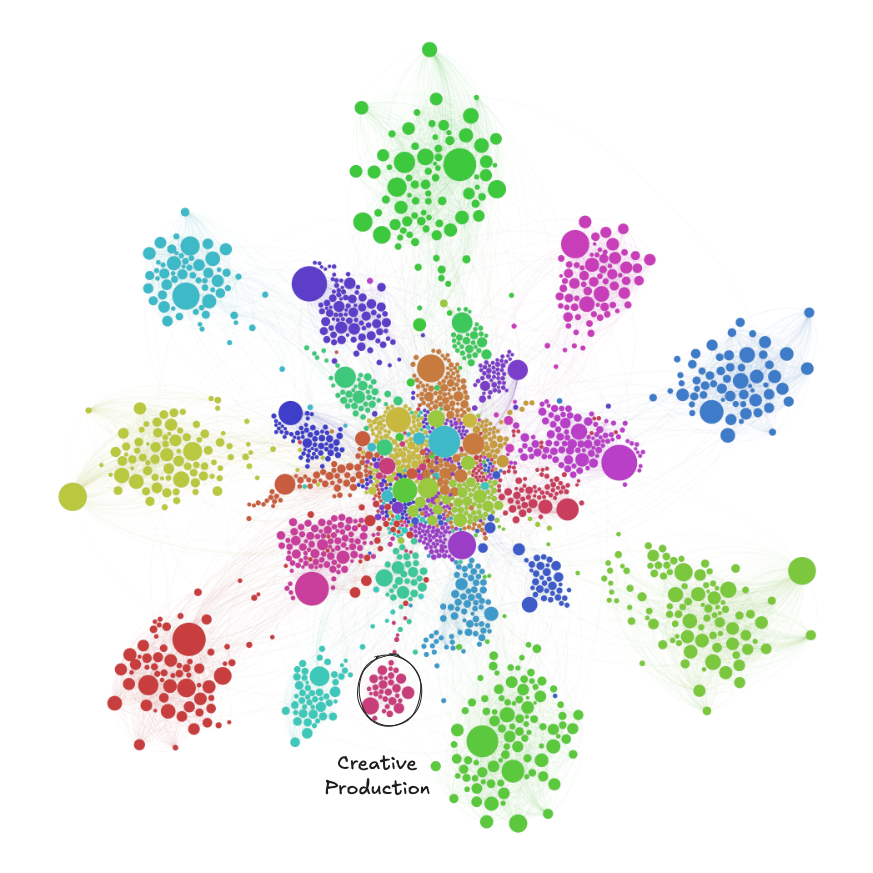

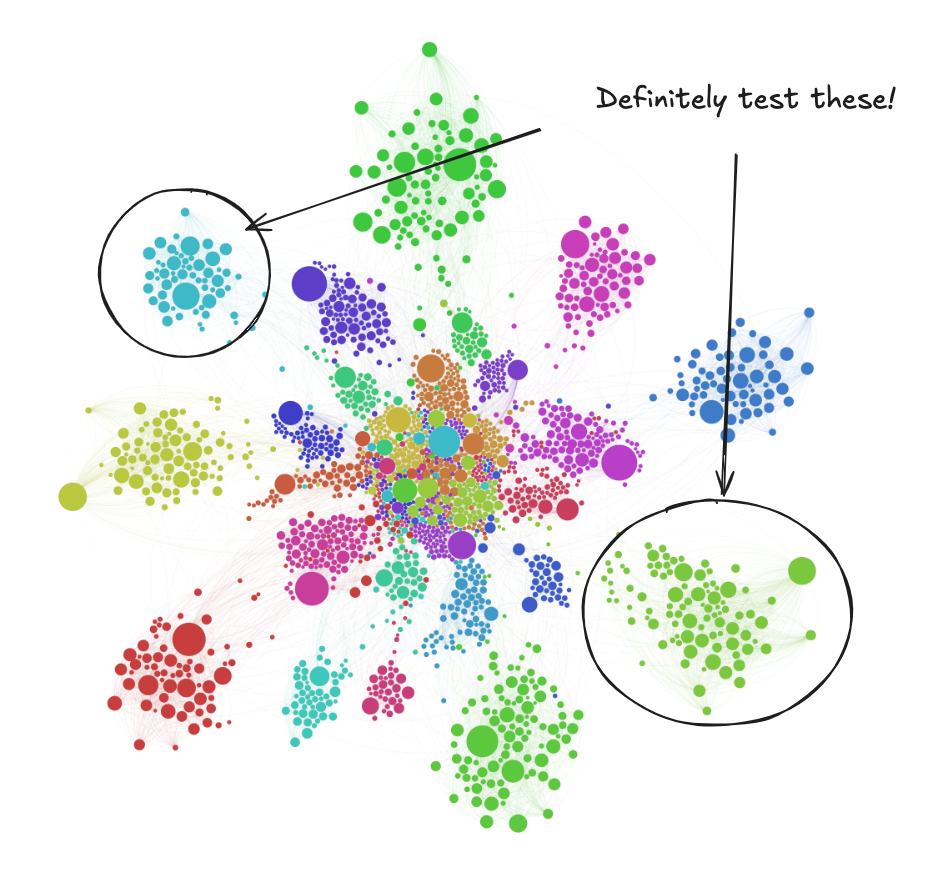

We’ve been talking about the approach generally, so let’s put some specifics against it. After synthesizing all publicly available data and conducting 100s of (mostly) generative interviews, we might run a cluster analysis to better organize the 1000s of JTBDs that abound across the landscape, in this case focusing on Adtech (an area in which I’ve spent a good deal of time):

We can organize these JTBDs into various types of clusters: by type (monetization versus technical), by size (big versus not so big), and crucially, by how well current solutions actually deliver JTBD-curing solutions across multiple vectors:

- Effectiveness - How completely the solution addresses the core job

- Efficiency - How quickly and with what resources the job can be completed

- Satisfaction - Customer perception of the solution quality

- Adoption barriers - Psychological switching costs and resistance to change

- Market penetration - What percentage of potential users actually employ the solution

We’re particularly interested in uncontested opportunities of sufficient potential scale, of course, so this cluster here at the bottom is interesting:

Some of my collaborators have built and scaled both creative teams and technologies and know firsthand just how many jobs are not currently being done with available solutions today. Yes there is a distinct need for improved ad creative production tools, but because this area resides squarely within the GenAI streetlight, competitive ferocity here is high, and at least for now, we should expect great solutions to emerge, both from the major platforms (Meta, Google) and from independent players (Celtra and whoever eventually knocks them from their perch).

But within this cluster we see an interesting and mostly unexplored node, focused not on the creatives but the personnel behind the creatives. And more specifically, we see an unmet JTBD, voiced by Creative Directors:

Help me ensure consistent quality and brand alignment across all creative work produced by my team without requiring my continuous direct involvement in every piece.

Let’s be clear that GenAI craze or not, traditional approaches have heretofore failed to either identify this opportunity or offer sufficient solutions to satisfy this heavily weighted JTBD by Creative Directors. Given the scale of ad creative direction, we think that a winning solution or solutions could easily scale to $100M+ in annual revenue. We don’t really care whether the lack of current solutions in market is because creative production tools are always first in line or because every other entrepreneur or company passed on “workflow enhancement” for being too small a problem to solve.

Our analysis identifies it as a tasty opportunity to pursue - but first we need to actually discover a proper solution that can deliver on this potential.

Exploring the vast sea of (potential) solutions

Let’s assume a startup chose to focus on this “Creative Director JTBD” opportunity, and rather than continue with such cumbersome vernacular, let’s call it Seurat. We can bet that the founders would do a bit of brainstorming, a bit of research, potentially (though not always) talk to a number of Creative Directors, and very quickly converge on a solution. They would build a prototype, raise a seed round, build out the full product and, with very little runway remaining, launch and hope for the best. It’s definitely possible that their solution immediately gains enough traction to garner a subsequent funding round that keeps them afloat for at least another 18 months, but as above, we know that the survival rate for startups at this point is just 10%. The law of large numbers is not working in their favor.

This is the standard, the default manner in which the 3000+ new startups each year attempt to hit it big. And almost without exception they will fail, and predictably, not because they don’t want it badly enough, not because they’re not qualified or even because they haven’t executed. It’s because they’ve built the wrong solution.

Let’s say, for example, that for Seurat we could construct an archive of potential solutions - not products, not businesses, just ideas. We know on principle that most of these will not work, so the question is - how do we actually find our way to at least one solution that can work? Herein we find the fundamental flaw for almost all startups today:

Without exploration, we prematurely converge on a single solution that is almost certainly incorrect, and pivoting in and around this initial solution won’t matter much if the actual solution lies in a completely different location within the distribution. When teams commit to specific implementations before systematic exploration, they cut off their optionality and dramatically reduce their chances of finding breakthrough value creation.

The 90% failure rate is not a law of innovation but a direct result of premature commitment to doomed solutions.

Even with extensive experience in the domain, we can never know with even modest certainty whether any given solution is an optimal fit for the opportunity. The rational decision isn't to follow our intuition or push our chips into the center, but to first ground ourselves in reality and then systematically explore the vast space of potential solutions before making any commitments. Said differently, we don’t have conviction in any given solution but in our ability to find one if it actually exists.

Systematic combinatorial discovery

At its core, systematic combinatorial discovery enables us to generate hundreds of diverse, high-quality solution concepts. It is both a system - a set of processes that we can repeat and improve on as we scale - and a platform - a set of underlying technologies that themselves will evolve as we improve. This last piece is crucial. The design of this entire approach is inspired by evolution - the most productive, open-ended algorithm that has ever existed, generating the hundreds of millions of species, the incredible biodiversity, that populates the world around us. Before we commit to a single product solution, even a product direction, we need to evolve hundreds of potential solutions as part of this systematic discovery process.

I’ve briefly outlined the “systematic” piece of this discovery process, but let’s turn our attention now to the “combinatorial” component. Every technology in history is composed of technologies that are further composed of technologies. That is, every piece of technology we encounter can be decomposed into a number of underlying primitives. Here’s how complexity theorist Brian Arthur describes it:

“Physically, a jet engine and a computer program are very different things. One is a set of material parts, the other a set of logical instructions. But each has the same structure. Each is an arrangement of connected building blocks that consists of a central assembly that carries out a base principle, along with other assemblies or component systems that interact to support this.”

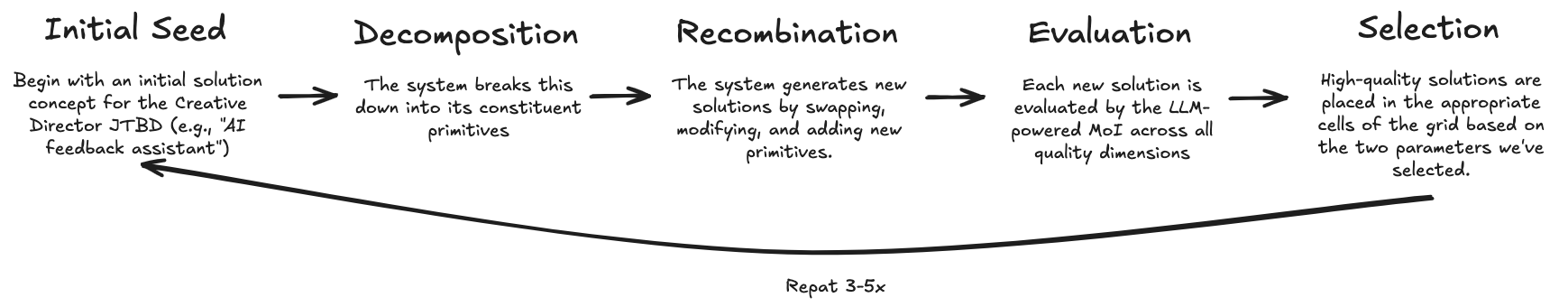

If we agree that all technologies are composed of primitives, and that these primitives are largely (though not entirely) generalizable across different types of technologies, then we can think of the process of creating new technologies as one of primitive recombination - we are consistently and forever smashing different technologies together, both old and new, to create novel technologies. This brings us to the crux of systematic combinatorial discovery: we repeatedly and in predictable fashion decompose products into their core primitives and recombine these into wholly new arrangements in order to solve new problems.
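To make decomposition and recombination concrete, here is a minimal sketch. The product names and primitives are invented for illustration, and exhaustive enumeration with `itertools.combinations` is only a stand-in for whatever recombination strategy a real system would use.

```python
from itertools import combinations

# Hypothetical products decomposed into hypothetical primitives.
products = {
    "review_tool": {"annotation_layer", "approval_workflow", "comment_thread"},
    "asset_manager": {"version_history", "tagging", "search_index"},
    "style_checker": {"rule_engine", "brand_palette", "tagging"},
}


def primitive_archive(products):
    """Union of all primitives, sorted so the ordering is reproducible."""
    return sorted(set().union(*products.values()))


def recombine(primitives, size, existing):
    """Yield every primitive set of the given size that no existing product already contains."""
    for combo in combinations(primitives, size):
        candidate = frozenset(combo)
        if not any(candidate <= parts for parts in existing.values()):
            yield candidate


archive = primitive_archive(products)
candidates = list(recombine(archive, 3, products))
print(len(archive), "primitives ->", len(candidates), "novel three-primitive arrangements")
```

Even this toy archive of eight primitives yields dozens of untried arrangements, which is why brute-force enumeration stops being practical quickly and a guided search becomes necessary.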

Let's shift this from the abstract back to our exploration of Seurat. Our baseline observations revealed specific workarounds that Creative Directors have developed - impromptu feedback channels, screenshot collections with annotations, and ad-hoc style guides - each representing informal recombinations of existing tools to address their needs. These observed adaptations become valuable primitives in our solution portfolio.

Building on these real-world patterns, we want to generate dozens or hundreds of additional potential solutions that we can systematically test. But how do we actually generate these solutions at scale? We begin by decomposing traditional software products into a large primitive hierarchy that includes items such as:

We then can break down thousands of publicly available software products to create a product component archive - this is our starting point for recombination. But we still have this issue of systematizing the process. How do we recombine this primitives archive rapidly and at scale to actually provide us with what we really want - an archive composed not of components but actual product solutions for Seurat? Here we turn to another evolution-inspired set of technologies - quality-diversity (QD) algorithms.

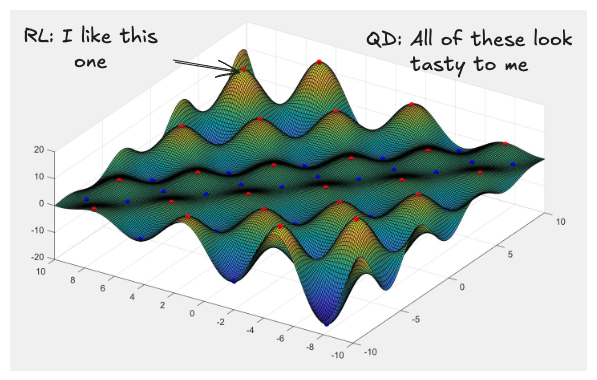

QD operates a bit like a specialized team of explorers interested not in finding a single mountain but charting the entire range.

The algorithms are interested in discovering the full breadth of diversity available, whereas reinforcement learning (and the traditional startup method) optimizes for a single, best solution.

Rather than choosing between exploration and optimization, QD algorithms pursue both simultaneously through a sophisticated architecture that maintains and evolves a collection of diverse, high-performing solutions. As one researcher notes:

“the hope in QD is to uncover as many diverse behavioral niches as possible, but where each niche is represented by a candidate of the highest possible quality for that niche. That way, the result is a kind of illumination of the best of all the diverse possibilities that exist.”

This shift from searching for a single optimal solution to mapping landscapes of high-quality possibilities aligns perfectly with our needs in product discovery. Just as nature maintains diverse species adapted to different niches rather than converging on a single “best” organism, QD algorithms maintain diverse solutions adapted to different contexts and requirements. This approach recognizes that in product development, there rarely exists a single “best” solution – different users, contexts, and requirements demand different approaches.

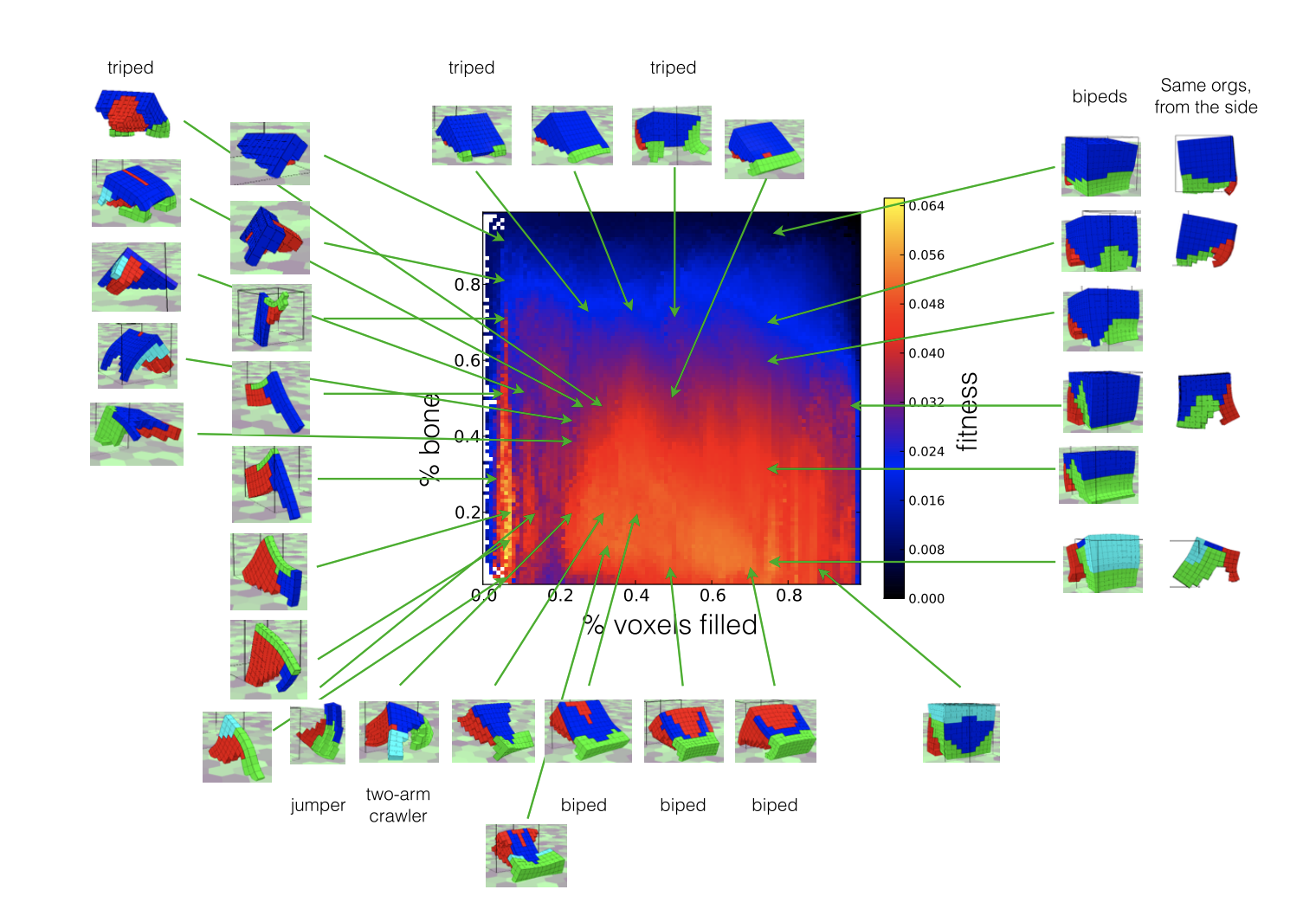

At the heart of QD lies a fundamental reframing of how we represent different solutions. Rather than evaluating products along single metrics of performance, QD maps them across "behavioral spaces" – multidimensional representations of how solutions actually function or behave.

Consider how we naturally think about products: we don't evaluate smartphones simply by processing speed, or apps purely by download numbers. Instead, we consider how they behave across multiple dimensions – user interaction patterns, resource usage, market fit, technical architecture.

QD formalizes this intuitive understanding. Each solution is mapped into a behavioral space defined by multiple dimensions we care about. For a mobile app, these dimensions might include interaction complexity, aesthetic feel, and user learning curve. For a business model, they might include customer acquisition cost, pricing model, and operational complexity. This mapping creates what amounts to a topographical map of the possibility space, revealing relationships and patterns that might be invisible in simpler metrics.
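A behavioral space becomes usable once each solution can be assigned to a cell in it. The sketch below assumes every dimension has already been normalized to the range 0 to 1; the `to_cell` helper, the five bins per axis, and the example values are illustrative choices, not part of any specific QD library.

```python
def to_cell(descriptor, bins):
    """Map a behavior descriptor with values in [0, 1] to a tuple of bin indices."""
    cell = []
    for value, n_bins in zip(descriptor, bins):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"descriptor value {value} outside [0, 1]")
        # A value of exactly 1.0 belongs in the last bin rather than an extra one.
        cell.append(min(int(value * n_bins), n_bins - 1))
    return tuple(cell)


# Hypothetical mobile-app descriptor:
# (interaction complexity, aesthetic feel, user learning curve)
app = (0.15, 0.72, 1.0)
cell = to_cell(app, bins=(5, 5, 5))
print(cell)  # (0, 3, 4)
```

Two solutions that land in the same cell behave similarly and compete on quality; solutions in different cells are kept side by side, which is what preserves diversity.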

Traditional approaches to product development often fail because they attempt to reduce complex, multidimensional challenges to simple optimization problems. Real product and business opportunities exist in spaces defined by intricate interactions between user needs, technical capabilities, market dynamics, and resource constraints. QD’s behavioral space mapping naturally accommodates this complexity, allowing products to be evaluated and evolved across all relevant dimensions simultaneously.

This capability proves particularly powerful when dealing with what researchers call “complex generative systems” – spaces in which valuable solutions might exist in any number of configurations and combinations. As one study notes, “Rather than a single optimum or best design, such systems may have many designs that the designer would consider worthwhile.” QD’s ability to maintain and improve multiple solutions simultaneously means that promising but unconventional approaches have time to develop and prove their value, rather than being prematurely discarded.

Quality Diversity in Action

Let’s shift this from the conceptual back to the concrete. We begin just as the potentially doomed startup began - with the seed of an idea. Whereas the traditional startup takes that seed, commits to it, and starts building, we use that seed as, well, a seed - a starting point for our QD-powered discovery engine to begin its process. That engine, which we call Kensho, takes the initial idea and systematically mutates and evolves it until we’ve built a full archive of highly differentiated, high quality potential solutions:

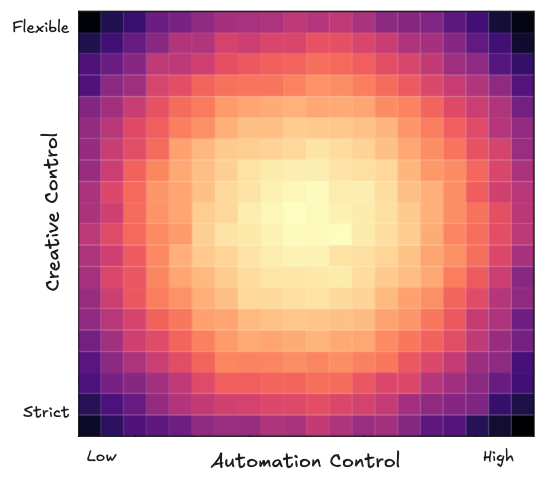

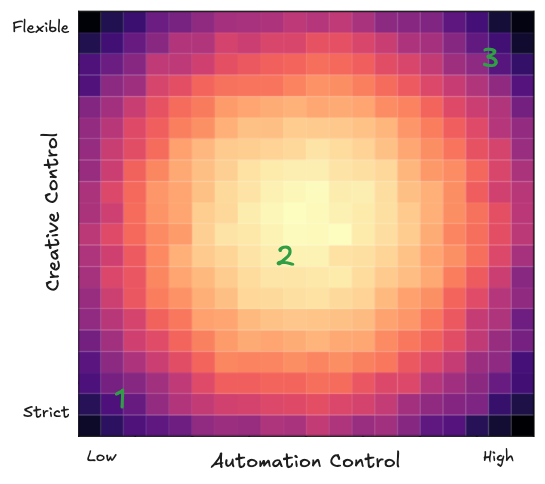

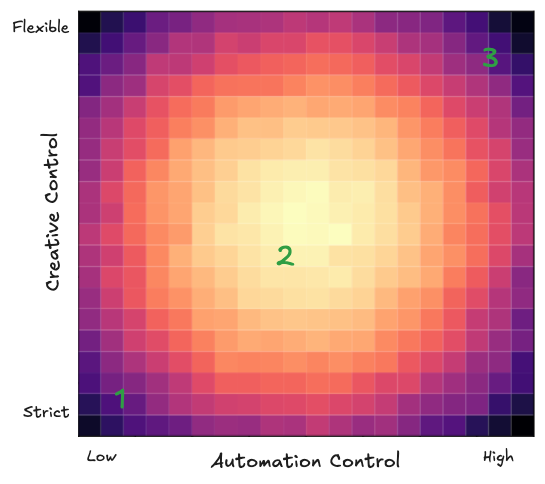

The final output is a solution portfolio, here consisting of a 20×20 grid containing up to 400 distinct, high-quality solutions organized by their behavior characteristics.
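One well-known QD algorithm that produces exactly this kind of grid archive is MAP-Elites. The sketch below is a toy version under loud assumptions: solutions are short lists of numbers, Gaussian noise stands in for the mutation step, and `quality` is a made-up scoring function. It shows the archive mechanics only and is not the Kensho engine itself.

```python
import random

GRID = 20  # 20 x 20 archive, so at most 400 cells


def cell_of(solution):
    """Use the first two genes (each in [0, 1]) as the behavior descriptor."""
    return tuple(min(int(g * GRID), GRID - 1) for g in solution[:2])


def quality(solution):
    """Toy stand-in for a real scoring workflow: higher is better, maximum 10."""
    return 10.0 - 10.0 * sum((g - 0.5) ** 2 for g in solution) / len(solution)


def mutate(solution, rng, step=0.1):
    """Perturb each gene with Gaussian noise and clamp it back into [0, 1]."""
    return [min(1.0, max(0.0, g + rng.gauss(0.0, step))) for g in solution]


def map_elites(seed, iterations=5000, rng_seed=0):
    rng = random.Random(rng_seed)
    # The archive maps each cell to its current elite: (score, solution).
    archive = {cell_of(seed): (quality(seed), seed)}
    for _ in range(iterations):
        # Pick a random elite as the parent, then mutate it into a child.
        _, parent = rng.choice(list(archive.values()))
        child = mutate(parent, rng)
        score, cell = quality(child), cell_of(child)
        # Keep the child only if its cell is empty or it beats the incumbent.
        if cell not in archive or score > archive[cell][0]:
            archive[cell] = (score, child)
    return archive


archive = map_elites(seed=[0.5, 0.5, 0.5])
print(f"{len(archive)} of {GRID * GRID} cells filled")
```

The key design choice is that competition is local: a child only ever replaces the elite in its own cell, so a mediocre but unusual solution is never crowded out by a strong but conventional one.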

Now, the above archive doesn’t look like much yet, so let’s build out the details. First, it’s important to point out that every one of the above cells represents a distinctive potential solution for Seurat:

The current portfolio is a two-dimensional space that requires two variables for our axes. We can ultimately choose whatever we want here - and in reality we would choose multiple variations of these two variables to provide a more thorough understanding of the solution space - but here we’ve chosen to plot solutions by their automation level and creative control:

- X-axis: Automation Level (Low to High) - how much the solution automates versus augments human judgment

- Y-axis: Creative Control (Strict to Flexible) - how tightly the solution enforces brand guidelines and creative standards

Automation level and creative control provide us with measures of differentiation, but we also want solutions that are high quality, which we again can measure multiple ways, but let’s deploy a five-dimensional quality vector here:

- Problem-Solution Fit (0-10): How well does the solution address the core JTBD? Does it help Creative Directors maintain quality without constant involvement? Does it address the specific pain points identified in research?

- Implementation Feasibility (0-10): How realistic is the solution to build, in terms of technical complexity, required resources, and timeline?

- User Adoption Likelihood (0-10): What is the probability of acceptance by Creative Directors? How well does it align with existing workflows? What is the learning curve and perceived value?

- Differentiation (0-10): How unique is the solution compared to existing approaches? What novel capabilities or unique combinations of features does it offer? Does it address often overlooked aspects of the problem?

- Scalability (0-10): What is the potential to grow with user needs? Can it handle multiple projects, support growing teams, and expand capabilities over time?

Solutions scoring below a threshold (e.g., 6.0) would be considered low quality and would need improvement or replacement, and though the scoring process itself requires its own analytics workflow, we’ll spare you those details here.
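As a rough illustration of the thresholding step, the sketch below collapses the five scores into one number. The text does not say how the dimensions are combined, so the unweighted mean used here is an assumption, as are the dimension keys and the two example score sets.

```python
from statistics import mean

DIMENSIONS = (
    "problem_solution_fit",
    "implementation_feasibility",
    "user_adoption_likelihood",
    "differentiation",
    "scalability",
)
THRESHOLD = 6.0  # the example threshold mentioned in the text


def overall(scores):
    """Unweighted mean of the five 0-10 scores (an assumed aggregation rule)."""
    missing = [d for d in DIMENSIONS if d not in scores]
    if missing:
        raise KeyError(f"missing dimensions: {missing}")
    return mean(scores[d] for d in DIMENSIONS)


def needs_rework(scores, threshold=THRESHOLD):
    """True when a solution falls below the quality bar."""
    return overall(scores) < threshold


# Hypothetical scores for two solution concepts.
strong = dict(zip(DIMENSIONS, (8, 7, 7, 6, 8)))
weak = dict(zip(DIMENSIONS, (7, 4, 5, 6, 5)))
print(overall(strong), needs_rework(strong))
print(overall(weak), needs_rework(weak))
```

A weighted mean, or a rule requiring a minimum on every dimension, would be equally plausible; the right choice depends on which failure modes matter most for the opportunity.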

What makes this approach so valuable but also feasible today is the massive advancements made in LLMs. Because they have ingested the entire corpus of human knowledge as manifested on the open internet, LLMs have proven to be incredible partners for our QD approach - able to operate the mutation and evolution process itself, automatically score solutions, discard solutions when better ones emerge. The LLM (or more broadly the foundation model) acts as our “discovery operator” for this approach.

This evaluation challenge creates a fundamental tension: our approach demands testing hundreds of distinct solutions to maximize our chance of discovering breakthrough value, yet traditional methods would require us to build each solution before testing - an impossible task given resource constraints. We need a systematic way to evaluate our full solution archive before committing significant resources to development.

Simulating Discovery

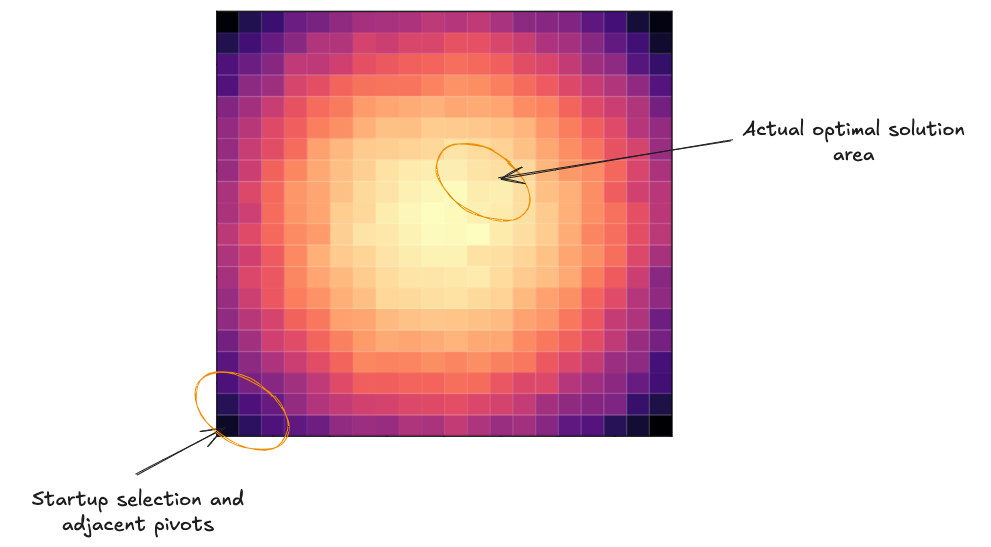

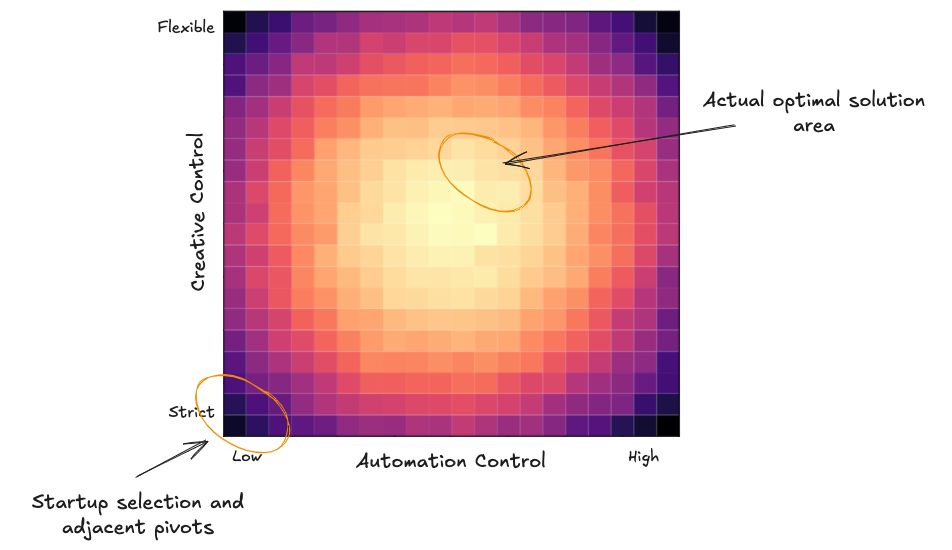

When faced with 400 potential solutions, a conventional startup would typically select just one or two concepts to prototype and discard the rest - a premature commitment that dramatically reduces their chances of discovering breakthrough value. Let’s assume that the optimal (and perhaps only viable) solution is here:

The startup might unwittingly and all-too-confidently have selected a solution...sub-optimally. There’s no iterating their way to the promised land here because the correct solution is a completely different species. This traditional approach creates artificial constraints on exploration and inevitably leads to the 90% failure rate we observe. Even with the best intentions, when a startup puts a single product in front of users and asks for feedback, they're setting themselves up for failure. Without comparative options, users will offer incremental improvements rather than fundamental critiques, potentially leading teams to polish products nobody actually wants.

Even if the team wanted to be more thorough, though, traditional methods simply won’t cut it. Building and testing even a small fraction of our solution archive would require months of development time and millions in investment (more on this later). This testing challenge isn't unique to product development. Other industries with far higher-stakes outcomes have developed sophisticated approaches to address similar constraints. Pharmaceutical companies have relied on computer-aided drug design (CADD) for decades, using molecular modeling to evaluate thousands of potential compounds before synthesizing a single molecule. Aerospace manufacturers employ sophisticated computational fluid dynamics simulations to test wing designs far before building physical prototypes.

In both cases, organizations recognized that the traditional "build-then-test" approach created artificial constraints on exploration, forcing premature convergence to potentially suboptimal solutions. Because the nature of most software development isn’t “life and death” in the same manner as these other industries, and because software is relatively cheap to develop, the industry has mostly ignored alternative testing methods. But with AI now enabling one-shot prototyping solutions that are rapidly driving prototyping costs to zero, the profligate waste of a 90% startup failure rate should no longer be tolerated.

By simulating how increasingly complex solutions perform across various scenarios, we can identify critical flaws and unexpected strengths that would remain hidden through traditional approaches - all before writing a single line of production code. We should not only increase our success rate but also the total volume and quality of our products - solving many more problems more effectively than at any time in history.

Constructing a Discovery Simulator

We’re not building a protein folding or airflow simulator (at least not yet), so our flavor of simulation is a bit different - a systematic process that digitally models how diverse users would interact with and respond to our potential solutions before writing a single line of production code. Rather than building physical prototypes of each solution, we create virtual environments where solution concepts can be rigorously evaluated through simulated interactions that mirror real-world usage patterns.

This simulation system consists of three integrated components to begin with:

- Simulants: AI-powered digital representatives of users with diverse characteristics and preferences

- Facilitated Interactions: Structured conversations that model how different user types would engage with solutions

- Pattern Recognition: Analytical frameworks that identify meaningful signals across thousands of interactions

By orchestrating these components into a coherent system, we can test hundreds of solution variants simultaneously, pulling forward learning that would traditionally take months or years to acquire. Our simulation approach recognizes the complementary relationship between digital modeling and human testing: simulation excels at comprehensive logical exploration across vast possibility spaces, while human testing provides irreplaceable insight into experiential dimensions and emotional responses.

Simulant Creation

Traditional persona-building is one of product development's most persistently hollow rituals. Carefully crafting detailed customer archetypes with fictional names, hobbies, and demographic profiles creates an illusion of user understanding without actually enhancing product decisions. Even the notion of an "ideal customer profile" (ICP) fails to capture the fundamental reality that any product must serve a diverse distribution of users with varied needs and interaction patterns.

For simulation to yield meaningful insights, we must instead focus exclusively on dimensions that directly impact product interaction patterns while deliberately generating varied characteristic combinations that reveal the full distribution of potential responses.

Simulants (simulated users) are entities designed not to mimic specific individuals but to represent distinct behavioral clusters within our target user space - to simulate mass behavioral diversity. They are built on foundation models fine-tuned with industry-specific data and calibrated against actual Creative Director behavior patterns, allowing them to exhibit realistic variation across our defined characteristic dimensions while maintaining consistent internal logic in their feedback.

Our earlier baseline observations of Creative Directors' current workflows - their improvised feedback systems, ad hoc documentation methods, manual consistency checking, and status tracking workarounds - directly inform simulant creation here. These observed patterns reveal not just pain points but specific behavioral adaptations that our simulants attempt to accurately represent. By encoding these observed behaviors into our simulant characteristics, we ensure they respond to solution variants with the same practical concerns and adaptive mindsets we witnessed in real Creative Directors.

As such, simulant characteristics include only those dimensions directly relevant to how they might evaluate and interact with our solutions:

- Decision-making style: The spectrum from data-driven analytical approaches to intuition-led aesthetic judgment

- Team management approach: Variations in directing versus delegating creative work

- Aesthetic sensibility: Preferences ranging from minimalist clarity to maximalist expression

- Technical background: The extent of technical understanding that shapes feature expectations

- Industry experience: How varied exposure to different creative contexts influences evaluation

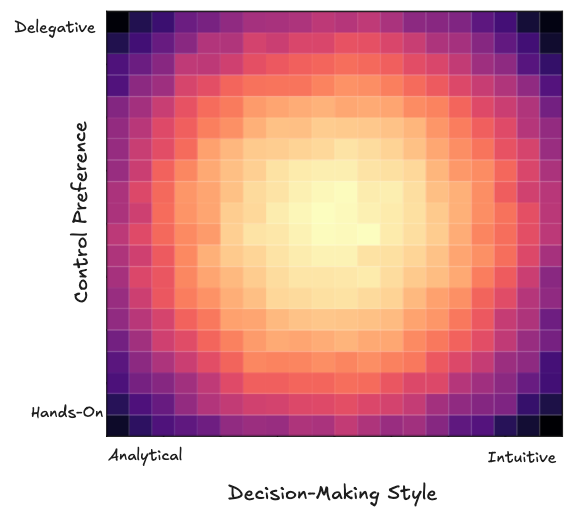

Rather than generating a single archetype, we apply quality-diversity algorithms to create a comprehensive archive of simulants that vary systematically across two primary dimensions:

- X-axis: Decision-Making Style (Analytical to Intuitive) At the analytical end, Creative Directors who prioritize metrics, systematic evaluation processes, and explicit design rationales. At the intuitive end, those who primarily trust aesthetic sensibility, holistic impression, and emotional response.

- Y-axis: Control Preference (Hands-on to Delegative) At the hands-on end, Creative Directors who prefer direct involvement in creative decisions at multiple levels. At the delegative end, those who focus on setting vision and direction while empowering team autonomy.

And we assess the quality of these generated simulants across five key dimensions:

- Profile Coherence (0-10): How logically consistent the simulant's characteristic combination is with real-world patterns

- Domain Knowledge Accuracy (0-10): The precision of the simulant's understanding of creative direction terminology, workflows, and constraints

- Characteristic Distinctiveness (0-10): How clearly differentiated this simulant is from others in our archive

- Attribute Balance (0-10): The realism of the simulant's mixture of strengths and limitations

- Behavioral Range (0-10): The breadth of response patterns the simulant can generate based on its profile

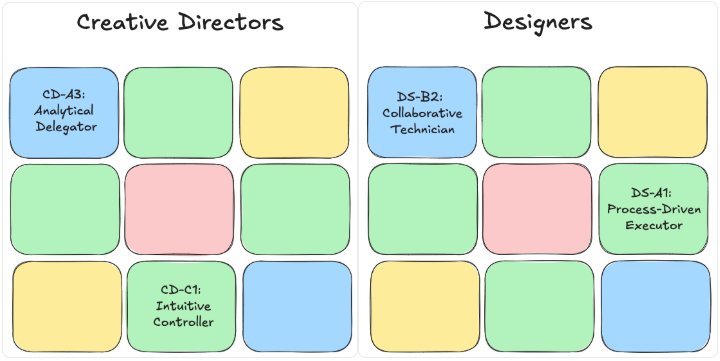

Just as with our solution variants, simulants scoring below threshold (e.g., 6.0) on these dimensions would require refinement or replacement to ensure the quality of our simulated feedback. For Seurat, we generate a 3×3 archive for each position (Creative Director and Designer), providing us with 9 distinct personas for each role.
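To make the archive mechanics concrete, here is a minimal sketch of a 3×3 simulant archive in the spirit of quality-diversity methods: each candidate is binned by its two behavioral axes, and each cell keeps only its highest-quality occupant. The `Simulant` class, the 0-to-1 axis scales, the use of a mean as the overall quality score, and the rule that every dimension must reach the threshold are all assumptions made for illustration, not a description of the production system.

```python
from dataclasses import dataclass
from statistics import mean

QUALITY_DIMENSIONS = (
    "profile_coherence",
    "domain_knowledge_accuracy",
    "characteristic_distinctiveness",
    "attribute_balance",
    "behavioral_range",
)
THRESHOLD = 6.0  # example threshold from the text
GRID = 3         # 3x3 archive


@dataclass
class Simulant:
    name: str
    decision_style: float      # assumed scale: 0.0 = analytical, 1.0 = intuitive
    control_preference: float  # assumed scale: 0.0 = hands-on, 1.0 = delegative
    scores: dict               # quality dimension -> score on the 0-10 scale

    def quality(self) -> float:
        # Assumption: overall quality is the plain mean of the five scores.
        return mean(self.scores[d] for d in QUALITY_DIMENSIONS)

    def passes(self) -> bool:
        # Assumption: every dimension must reach the threshold.
        return all(self.scores[d] >= THRESHOLD for d in QUALITY_DIMENSIONS)


def cell(s: Simulant) -> tuple:
    """Map the two behavioral axes onto a (column, row) cell of the grid."""
    def to_bin(value: float) -> int:
        return min(int(value * GRID), GRID - 1)
    return (to_bin(s.decision_style), to_bin(s.control_preference))


def build_archive(candidates) -> dict:
    """Keep the best passing simulant per cell; failing candidates are skipped."""
    archive = {}
    for s in candidates:
        if not s.passes():
            continue  # would be refined or replaced rather than archived
        key = cell(s)
        if key not in archive or s.quality() > archive[key].quality():
            archive[key] = s
    return archive


def uniform(score: float) -> dict:
    return {d: score for d in QUALITY_DIMENSIONS}


candidates = [
    Simulant("a", 0.10, 0.20, uniform(7.0)),
    Simulant("b", 0.15, 0.10, uniform(8.0)),  # same cell as "a", higher quality
    Simulant("c", 0.90, 0.90, {**uniform(9.0), "attribute_balance": 5.0}),  # fails
    Simulant("d", 0.50, 0.95, uniform(6.5)),
]
archive = build_archive(candidates)
print(sorted(archive))  # [(0, 0), (1, 2)]
```

Empty cells in the resulting dictionary show where further simulant generation is still needed before the archive is complete.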

By pairing each Creative Director simulant with each Designer simulant in facilitated conversations, we create 45 unique interaction combinations for comprehensive testing. This diversity allows us to systematically map how different user characteristics might influence solution evaluation without relying on the false precision of traditional personas.

To provide some basic color on how these simulant personas might differ:

- Creative Directors: The CD-A3 approaches creative oversight through systematic frameworks and clear success metrics, preferring to establish measurable objectives and evaluation criteria rather than prescribing specific design choices. In contrast, the CD-C1 evaluates creative work through immediate aesthetic response rather than explicit criteria, trusting their refined sensibility developed through years of experience.

- Designers: The DS-B2 balances analytical and intuitive approaches, comfortable both with systematic design processes and creative exploration but also appreciating having space to explore solutions within established parameters. In contrast, the DS-A1 approaches design as a systematic process with explicit rules and best practices, preferring to work within clearly defined constraints and explicit direction rather than open-ended exploration.

The simulant archive serves not as an end in itself but as the foundation for our next phase: systematically collecting feedback on our solution variants at a scale impossible through traditional methods.

Simulating Solution Feedback at Scale

The traditional approach to human interviews and usability testing is to hand-craft a discrete set of questions based on a narrow area of focus. There is little data available about what might be most effective for exploration within user testing, and so testers are forced to rely primarily on intuition rather than evidence. In the process, they often miss transformative opportunities that lie outside the narrow purview of their assumptions.

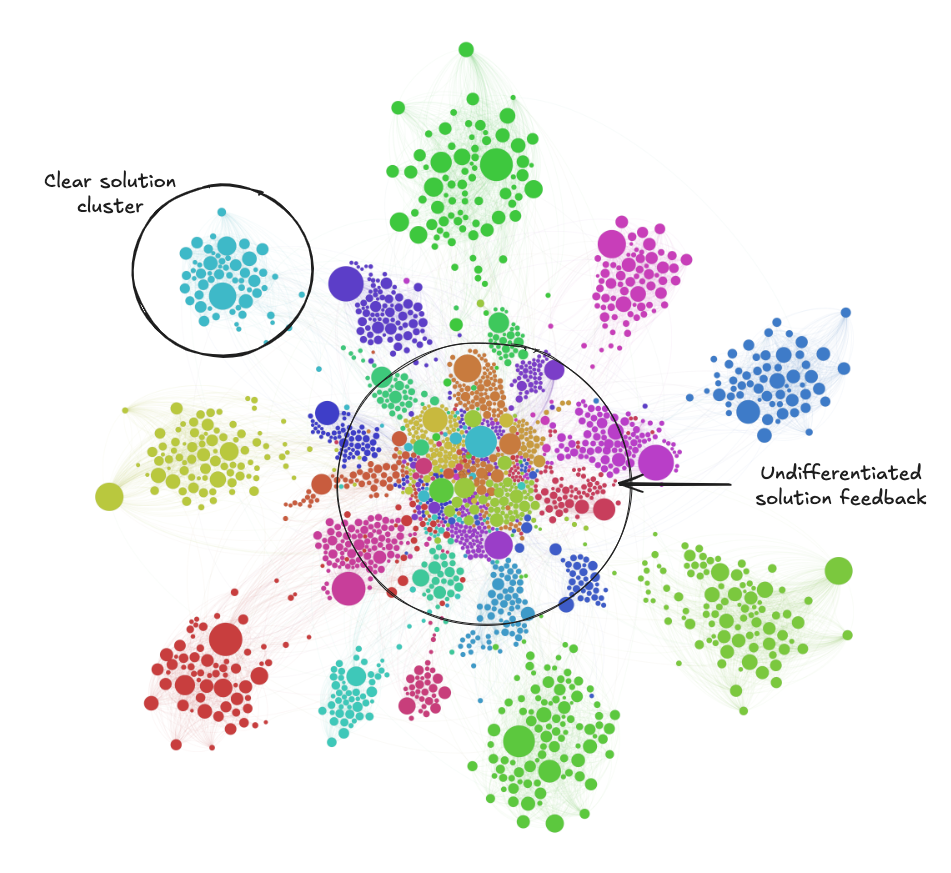

Our simulant-powered approach fundamentally inverts this dynamic. With our creative director and designer simulants now created, we can generate 45 distinct sets of interactions for each of our 400 solutions - creating a frankly ridiculously sized feedback archive of 18,000 distinct solution conversations. Rather than hand-craft the solutions and specific details we’ll place in front of our human testers in the next phase, we instead use this 18,000-conversation archive to systematically extract the features that appear most promising.

This is a “curriculum-based approach” to feature discovery - we allow the best options to naturally emerge from our automated exploration of the entire solution space, rather than artificially narrowing to what our human brains allow. By systematically exploring the entire solution space before human engagement, we transform solution selection from an intuition-driven exercise to an evidence-based decision firmly grounded in comprehensive data.

Pattern Recognition and Emergence

Rather than simply generating isolated feedback from individual simulants, we instead structure dynamic conversations between three different simulants - a Facilitator simulant guides the conversation between a Creative Director and a Designer simulant variant - mirroring the collaborative feedback processes that characterize real-world creative environments.

For each of the 400 solutions in the archive, these facilitated conversations unfold across 45 distinct simulant pairings, systematically exploring how different characteristic combinations influence solution perception. With thousands of simulated conversations generated, we shift from conversation generation to pattern recognition - identifying meaningful signals amid the noise of individual preferences.
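As a rough sketch of how this conversation plan could be enumerated, the snippet below crosses every solution with every simulant pairing and records the three roles in each session. The counts of 400 solutions and 45 pairings are taken from the text as given; the `ConversationJob` structure and the integer identifiers are hypothetical placeholders.

```python
from dataclasses import dataclass
from itertools import product

N_SOLUTIONS = 400  # solutions in the archive, per the text
N_PAIRINGS = 45    # Creative Director / Designer pairings, per the text


@dataclass(frozen=True)
class ConversationJob:
    solution_id: int  # hypothetical index into the solution archive
    pairing_id: int   # hypothetical index into the list of simulant pairings
    roles: tuple = ("facilitator", "creative_director", "designer")


def plan_conversations(n_solutions=N_SOLUTIONS, n_pairings=N_PAIRINGS):
    """One facilitated conversation per (solution, pairing) combination."""
    return [
        ConversationJob(solution_id=s, pairing_id=p)
        for s, p in product(range(n_solutions), range(n_pairings))
    ]


jobs = plan_conversations()
print(len(jobs))  # 18000
```

Each job would then be handed to whatever system actually runs the facilitated conversation, which is outside the scope of this sketch.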

Large-scale simulated feedback enables a different epistemological approach to understanding solution value. Rather than treating each piece of feedback as an isolated data point, we analyze patterns across multiple dimensions:

- Persistence patterns: Feedback that consistently emerges across diverse simulant profiles carries more weight than isolated observations

- Correlation patterns: Relationships between simulant characteristics and specific solution preferences reveal how different user types might respond

- Interaction patterns: How creative directors and designers align or diverge in their assessment of specific features reveals potential organizational dynamics

This multi-dimensional analysis allows us to better distinguish between statistical artifacts and meaningful relationships. When a particular strength or concern appears consistently across diverse simulant profiles, it likely represents a fundamental attribute of the solution rather than an idiosyncratic reaction. Conversely, when feedback strongly correlates with specific simulant characteristics, it reveals how the solution might perform differently across various user segments.
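The first two pattern types can be sketched with very little machinery. In the hedged example below, persistence is measured as the share of distinct simulant profiles that raised a theme, and correlation as a Pearson coefficient between one simulant characteristic and expressed sentiment. The record fields, profile labels, and sentiment scale are illustrative assumptions; interaction patterns between roles are left out for brevity.

```python
from collections import defaultdict
from math import sqrt


def persistence(feedback):
    """Share of distinct simulant profiles that raised each theme."""
    profiles = {f["profile"] for f in feedback}
    seen = defaultdict(set)
    for f in feedback:
        for theme in f["themes"]:
            seen[theme].add(f["profile"])
    return {theme: len(who) / len(profiles) for theme, who in seen.items()}


def pearson(xs, ys):
    """Pearson correlation; returns 0.0 when either series has no variance."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy) if sx and sy else 0.0


# Hypothetical feedback records for one solution feature.
feedback = [
    {"profile": "CD-A1", "decision_style": 0.0, "sentiment": 0.9,
     "themes": {"compliance_automation", "metrics"}},
    {"profile": "CD-B2", "decision_style": 0.5, "sentiment": 0.5,
     "themes": {"compliance_automation"}},
    {"profile": "CD-C3", "decision_style": 1.0, "sentiment": 0.1,
     "themes": {"compliance_automation", "loss_of_control"}},
]

share = persistence(feedback)
r = pearson(
    [f["decision_style"] for f in feedback],
    [f["sentiment"] for f in feedback],
)
print(share["compliance_automation"])  # 1.0: raised by every profile
print(round(r, 3))                     # -1.0: sentiment falls as style turns intuitive
```

A theme with a persistence near 1.0 is a candidate fundamental attribute of the solution, while a strong correlation points to a segment-specific response.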

Pattern Emergence Example

Creative directors with analytical decision-making styles initially express strong interest in automated feedback features, while those with intuitive styles express skepticism. However, when these features are discussed in the context of routine versus creative decisions, a more nuanced pattern appears.

Approximately 75% of all creative director simulants, regardless of decision-making style, express positive sentiment toward automation for technical compliance checking (brand colors, logo usage, sizing), while 83% express preference for human judgment on conceptual elements (message impact, emotional resonance, creative direction). This pattern persists across different team size contexts and management approaches, suggesting a fundamental distinction between where automation is valued versus where human judgment is considered irreplaceable.

This insight reveals a potential design principle that would have been difficult to identify through traditional small-sample testing: automate the technical while augmenting the creative.

From Simulation to Selection

The ultimate purpose of this massive-scale simulation isn't comprehensive documentation but effective filtration - identifying which solution features demonstrate the strongest potential for creating user value across diverse contexts. This pattern recognition transforms our initial 400-solution archive into specific feature combinations that merit tangible manifestation in prototypes. Rather than determining what to ask humans, we determine what to build for humans, focusing our limited prototyping resources on the most promising solution elements.

Consider just how different this is from traditional hand-crafted approaches. Rather than relying on subjective human judgment to determine which solutions to test, we leverage empirical patterns from thousands of simulated interactions. Solutions that consistently generate positive feedback across diverse simulant profiles rise to the top, while those that demonstrate fundamental flaws across multiple dimensions are filtered out.

More importantly, solutions that reveal distinctive strengths in different regions of the characteristic space emerge as high-contrast candidates for human testing.

Humans provide more meaningful feedback when comparing distinctly different options rather than similar variations. When we look at our 400-solution archive, we understand that the optimal solution might exist anywhere within this space. Because we can’t know ahead of time where the optimal solution exists, or even whether it exists, we need to sample multiple times and select for high-contrast options:

We deliberately select solutions from widely separated regions of our archive - ones that represent meaningfully different approaches to the same underlying JTBD. The goal isn't simply to identify the "best" solutions according to some aggregate metric, but to enable more effective learning through comparison. Let’s reiterate this - the goal of simulation-reality mapping is not solution selection but maximizing learning about solution features.
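One simple way to pick widely separated candidates is greedy farthest-point selection: start from a strong solution, then repeatedly add the one whose nearest already-chosen neighbor is farthest away. The sketch below is one possible heuristic, not necessarily the selection rule used for Seurat. It assumes each solution has coordinates in the archive and a simulated quality score, and the quality floor of 6.0 is borrowed from the earlier threshold purely for illustration.

```python
from math import dist


def select_high_contrast(solutions, k=3, min_quality=6.0):
    """Greedy farthest-point selection over archive coordinates.

    solutions: dict mapping solution id -> (coords, quality).
    Solutions below min_quality are filtered out before selection.
    """
    pool = {sid: coords for sid, (coords, q) in solutions.items() if q >= min_quality}
    if not pool:
        return []
    # Seed with the highest-quality viable solution.
    chosen = [max(pool, key=lambda sid: solutions[sid][1])]
    while len(chosen) < min(k, len(pool)):
        remaining = [sid for sid in pool if sid not in chosen]
        # Pick the solution whose closest chosen neighbor is farthest away.
        chosen.append(
            max(remaining, key=lambda sid: min(dist(pool[sid], pool[c]) for c in chosen))
        )
    return chosen


# Hypothetical archive positions and simulated quality scores.
solutions = {
    "s1": ((0, 0), 8.0),
    "s2": ((1, 0), 9.0),
    "s3": ((19, 19), 7.0),
    "s4": ((0, 19), 7.5),
    "s5": ((10, 10), 4.0),  # filtered out: below the quality floor
}
print(select_high_contrast(solutions))  # ['s2', 's3', 's4']
```

Note that "s1" scores higher than "s3" or "s4" but is skipped because it sits next to "s2": contrast, not aggregate score, drives the choice after the first pick.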

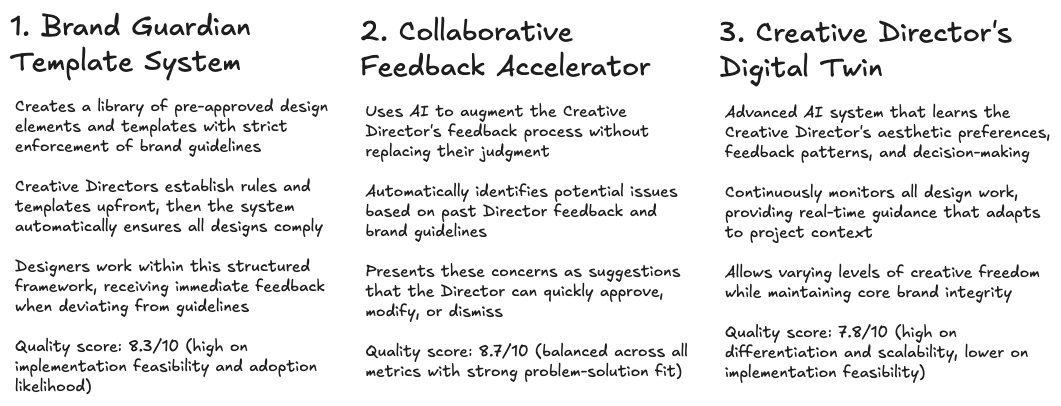

From this process, we identify three distinct high-contrast solution variants for initial human exploration:

Each represents a fundamentally different approach to the creative director's core job-to-be-done, offering distinctive value propositions that address the problem from different angles. By selecting these high-contrast variants, we maximize the learning potential of our subsequent human testing phase.

The insights generated through large-scale simulation simply cannot replace human feedback - but they can dramatically enhance its effectiveness. By systematically exploring the solution space before human engagement, we can ask better questions, focus on more meaningful comparisons, and extract deeper insights from limited human testing sessions. Rather than asking humans to help us identify what matters, we instead ask them to validate or challenge the patterns that emerged from simulation.

Prototyping: From Simulation to Tangible Experience

In traditional prototype development, a team selects a single concept, invests weeks or months building it, then places this solitary artifact before users, desperately hoping for validation rather than genuine feedback. Having invested substantially in a single direction, teams unconsciously filter feedback, amplifying positive signals while downplaying fundamental flaws. Even users participate in this charade, offering incremental improvement suggestions rather than stating the uncomfortable truth: the core concept misses the mark entirely. This ritual wastes precious resources on solutions that should never have reached the prototype stage.

Our approach inverts this dynamic entirely. Simulation provides unprecedented breadth of exploration, but evidence from cognitive science repeatedly demonstrates that humans struggle mightily to evaluate abstract concepts through imagination alone. What people say they want diverges dramatically from how they actually respond to tangible manifestations. Revealed preferences consistently outperform stated intentions as predictors of actual behavior.

This epistemological reality drives our transition from simulation to prototyping. While simulation excels at mapping logical relationships and function-based needs, humans excel at evaluating forms - the tangible, experiential, and emotional dimensions that remain largely inaccessible to our simulants. The prototyping phase thus serves three critical functions:

- Transforming abstract solution concepts into tangible experiences that reveal rather than rely on stated preferences

- Exposing the emergent properties of feature combinations that might not be apparent in isolation

- Providing critical calibration data that improves future simulation accuracy

By front-loading our exploration in simulation, we ensure that each prototype represents a high-potential region of the solution space worthy of investment. Rather than betting everything on a single concept, we develop multiple distinct prototypes that represent fundamentally different approaches, maximizing the learning value of every user interaction.

Prototype Generation Strategy

With simulation-derived patterns indicating promising feature combinations and current tool baselines establishing concrete reference points, we transition to actual prototype development. We’ve used the term prototype multiple times to this point, but what specifically do we mean by it? We previously posited that all technology is a recombination of core primitives, so our prototypes are initially focused on these primitives.

The primary goal in this phase is velocity - we can't know with certainty what will work, so we need to generate and test variations quickly and at scale. Our prototype development differs fundamentally from traditional approaches in three key ways:

- Component-driven architecture: Rather than building monolithic solutions, we develop modular components that can be recombined based on testing feedback

- Throwaway code: We prioritize speed over code quality, recognizing that none of this code will persist into production

- Variant diversity: For each key component, we develop multiple implementations that represent meaningfully different approaches

Based on the patterns identified in our simulant conversations and the baseline insights from current tool observation, our discovery pod identifies the core components that appear most crucial for initial prototyping, which in our specific case here might include:

- Brand consistency checking mechanisms with varying degrees of automation

- Feedback collection interfaces with different prioritization approaches

- Team collaboration spaces with various visibility and permission structures

Our earlier baseline observations of Creative Directors' workflows provided foundational insights into their challenges - improvised feedback systems, ad hoc documentation, manual consistency checking. These initial tool observations informed our simulant development, which led to comprehensive solution exploration, which in turn generated patterns that now guide our prototype priorities.

For each component, we generate multiple implementation variants that represent distinctly different approaches rather than minor variations. This deliberate diversity enables more effective learning through comparison - users can more easily articulate preferences when presented with meaningful contrasts:

Human Testing Framework

With our diverse set of component-level prototypes in hand, we re-engage with our collection of human Creative Directors and designers to gather tangible prototype feedback, focused on three key dimensions:

- Functional efficacy: How effectively does the component address the underlying job-to-be-done?

- Experiential quality: How does interaction with the component feel to the user?

- Integration potential: How might this component function within a broader system?

Unlike traditional usability testing, which most often follows rigid scripts, we use simulant interviewers to facilitate natural interaction between the humans and prototypes. Simulants allow us to maintain consistent coverage across all testers while enabling a far more dynamic and flexible environment for human testers - ensuring that we cover all of the areas we a priori decide are important while enabling unexpected insights to emerge. The feedback gathered during these sessions contains crucial information we simply couldn’t access through simulation alone:

- Emotional responses to different interaction patterns

- Intuitive understanding versus cognitive effort required

- Unexpected use cases and adaptation potential

- Subtle quality perceptions that defy explicit articulation

The insights gathered through these human testing sessions create rich feedback that complements and calibrates our simulation findings. Where simulants excel at logical assessment and systematic evaluation, human testers provide invaluable insight into emotional responses, intuitive understanding, and quality perceptions that often defy explicit articulation.

These testing sessions transform our understanding of how different elements might work together in a complete product. Components that consistently resonate with human testers become candidates for integration into more comprehensive prototypes, while those that reveal fundamental flaws are either redesigned or discarded.

Product Synthesis

Our extensive prototype testing - from component-level experiments to increasingly comprehensive implementations - generates an extraordinary volume of empirical data. Synthesis integrates these diverse learnings into coherent product concepts that address the core job-to-be-done of Seurat. Unlike traditional approaches that frame synthesis as selecting a "winning" design from a small set of alternatives, we draw from our rich archive of prototype feedback to identify patterns, combinations, and integrations that might never have emerged through linear development. The optimal solution will rarely exist fully formed in our initial testing; it emerges through systematic recombination of successful elements from across our testing archive.

We begin with pattern identification - examining user responses across all prototype variations to identify which capabilities consistently create value regardless of implementation details. For Seurat, several patterns emerged with striking consistency:

- Automated technical compliance checking (brand colors, spacing, asset usage) consistently received positive feedback across nearly all user profiles, with most Creative Directors explicitly noting the time savings these features would provide.

- Though there continued to be skepticism that the “Creative Director digital twin” would replace many of the human-human feedback sessions, there was distinct optimism that it would fundamentally improve development times for conceptual or aesthetic decisions.

- Visual annotation capabilities, particularly those that maintained persistent connections to design elements rather than static screenshots, emerged as unexpectedly valuable across multiple implementations.

These patterns reveal not just which features matter, but why they matter - providing crucial context for synthesizing complete product concepts rather than mere feature lists.

From here we move from pattern recognition to concept integration - combining successful elements into coherent wholes. Rather than simply aggregating popular features, this integration considers how different components interact as systems. For instance: the relationship between automated compliance checking and creative direction tools; the connection between annotation capabilities and feedback workflows; the interplay between brand guideline enforcement and designer autonomy.

This systemic perspective reveals emergent properties that might be invisible when evaluating components in isolation. When combining Seurat's automated checking with the digital twin, we discovered that the resulting workflow created not just the expected time savings but fundamentally transformed how Creative Directors approached quality control - shifting from reactive correction to proactive guidance.

Depending on the patterns identified and their integration potential, several outcomes are possible here:

- Single-Solution Synthesis: One integrated product concept emerges that comprehensively addresses the core job-to-be-done with a coherent implementation approach.

- Multi-Solution Synthesis: Multiple distinct product concepts emerge, each addressing the job-to-be-done through fundamentally different approaches that might serve different user segments or contexts.

- Partial-Solution Synthesis: While valuable components emerge, no complete solution yet demonstrates sufficient value to warrant advancement, indicating the need for additional discovery.

For Seurat, our synthesis yielded a single primary product concept that integrated automated compliance checking with a persistent creative director digital twin and persistent visual annotations, ensuring consistent quality and brand alignment without requiring continuous direct involvement in every creative asset. However, a great product alone doesn't create a sustainable business. To build something truly enduring, we need to extend our systematic approach beyond product design and into commercial discovery - understanding not just what to build, but how to monetize it effectively if we bring it to market.

Scaling and Compounding

The most revealing test of one’s innovation capability isn't creating a single successful product - it's building the second, fifth, or twentieth. Even amongst the 10% of startups that achieve initial success, the vast majority never launch another significant product. This unfortunate reality reveals two foundational challenges inherent within current approaches:

- Most companies treat innovation as an unrepeatable event rather than a systematic process. They lack methodologies that can be deployed consistently across multiple opportunities. Initial success builds resources, especially financial, but not capability - creating the paradoxical situation in which innovation capacity diminishes as investment capacity increases.

- Traditional approaches fail to improve with repetition. Each innovation cycle starts from approximately the same capability level as the previous one. There's no systematic mechanism to ensure that knowledge gained in one cycle enhances the effectiveness of future cycles.

Our development of systematic combinatorial discovery addresses both challenges by creating a framework that is both inherently scalable across multiple dimensions and designed to improve with each cycle.

Scaling: Systematic Repeatability

Unlike traditional approaches that rely on unrepeatable factors like founder intuition or market timing, our systematic combinatorial discovery methodology can be deployed consistently across opportunities, domains, and technological substrates. This inherent repeatability transforms innovation from unpredictable events to reliable systems.

Vertical Scaling: Domain Depth

Vertical scaling refers to our ability to systematically explore multiple opportunities within a single domain. For most companies, initial success in a domain rarely translates to systematic exploration of the entire domain opportunity space. They typically pursue the most obvious adjacent opportunities only, leaving vast territories unexplored.

Consider instead our initial mapping of the adtech opportunity space. We didn't identify Seurat as the sole promising direction but the first of a comprehensive archive of dozens of potential businesses targeting unmet Jobs To Be Done across the creative development lifecycle:

Beyond Seurat's focus on Creative Director workflow enhancement, our opportunity mapping already revealed unmet needs in AI-powered competitive analysis for campaign effectiveness and dynamic creative optimization for cross-platform campaigns. Each represents a distinct business opportunity with significant market potential that we can pursue with the same systematic rigor:

- Validation through systematic exploration

- Solution generation using quality-diversity algorithms

- Validation through simulation-reality mapping

- Commercial verification through empirical testing

Where traditional companies might pursue 2-3 related opportunities within a domain based on intuition or market trends, our systematic approach enables us to more comprehensively explore 20-30 within the same timeframe.

Unlike traditional companies that might try to pick off a couple of the most obvious opportunities (that are also likely to attract the greatest competitive ferocity), we systematically map the entire possibility space, including opportunities that traditional approaches would likely overlook.

Horizontal Scaling: Domain Breadth

We’re not interested in an adtech platform, of course. Our ambitions should extend far beyond this singular domain, to fintech, government, healthcare, education, and beyond, and systematic combinatorial discovery similarly ignores artificial boundaries between these domains. Traditional companies attempting to scale horizontally face a fundamental limitation: each new domain requires building domain expertise from scratch, often through expensive acquisitions or high-risk executive hires.

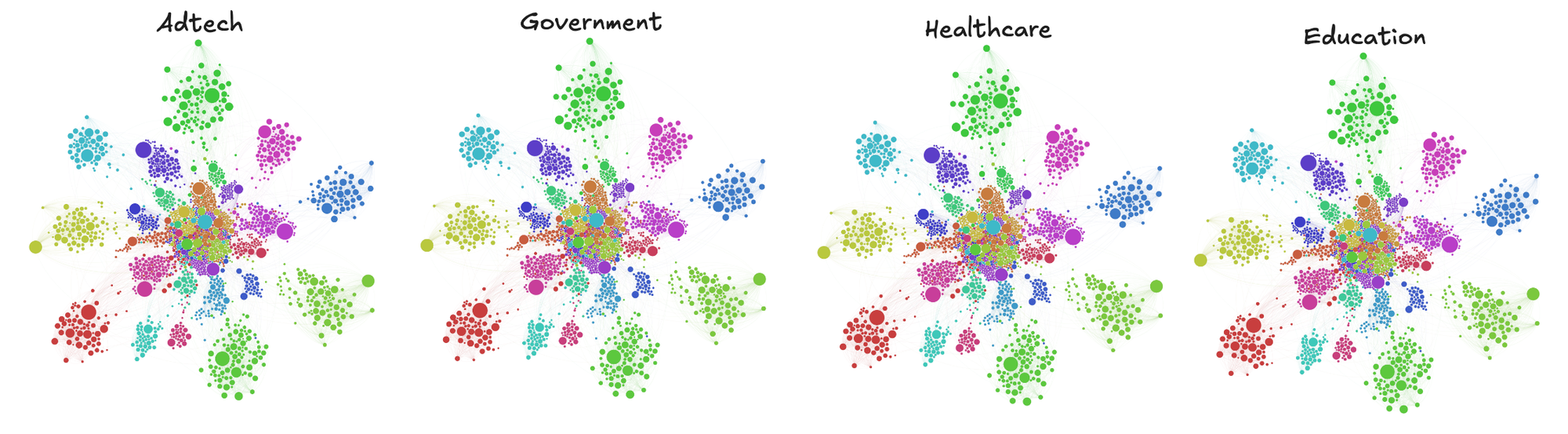

We don’t have the same problem. The same approach that identified Seurat in adtech can be applied to identify unmet needs in any market:

If we identify 30 promising opportunities in each of 20 different domains, we establish a pipeline of 600 potential businesses - each with clearer validation than most venture-backed startups achieve before significant investment.

Substrate Expansion: Beyond Software

We are intentionally pursuing software-only businesses at the start - not for any philosophical reasons, but because software businesses are (typically) far cheaper to build and, more importantly, far faster. The higher the velocity, the tighter the feedback loops, the faster we’ll learn and our systems will evolve. But our ambition is not to remain ensconced within the digital realm forever - there are so many more problems to solve and businesses to build if we expand our approach into additional substrates - into hardware and wetware.

These substrates present far greater challenges - higher capital requirements, longer development cycles, and more complex validation needs - but by first establishing and hardening our systems, we’re quite confident that the same systematic combinatorial discovery approach will translate from software into these new substrates:

- Quality-diversity algorithms work equally well for exploring hardware configurations as for software features

- Simulation-reality mapping becomes even more valuable when physical prototyping is expensive

- Opportunity mapping through JTBD research remains equally effective regardless of technical implementation

The opportunities here are tremendous. Consider DeepMind's AlphaFold as an illustrative example of how systematic approaches transform innovation in complex domains. Traditional experimental methods had determined approximately 200,000 protein structures over decades of work. AlphaFold's systematic, algorithmic, simulation-heavy approach has already predicted some 200 million in just a few years.

We can only hope that our systematic approach enables similar transformative potential across substrates. By establishing consistent methodologies that transcend specific technological implementations, we create innovation capacity that can expand into domains traditional approaches systematically avoid due to capital intensity or development timelines.

Compounding: Accelerating Improvement

A repeatable, scalable architecture is, by itself, a huge net positive over the standard one-and-done model, but such repeatability would admittedly be wasted if we did not learn how to systematically improve with each cycle, with each iteration. Traditional innovation approaches not only struggle with repeatability but demonstrate minimal improvement between cycles. A company's second product development effort typically begins with approximately the same capability level as its first, as any learning remains partial, informal, and often lost in transition between teams.

Let’s be clear - it is a choice to not seek out and develop specific mechanisms for compounding over time, and this is certainly not a choice we’re willing to make. As our primitive archives grow across domains, we develop increasing capacity to identify valuable cross-domain combinations that would remain invisible to traditional approaches.

- A workflow pattern discovered in creative automation might suggest novel approaches in financial software.

- A visualization technique from healthcare might transfer to educational products.

- An AI interaction model from one domain could inspire breakthroughs in another.

Traditional companies occasionally achieve such cross-domain insights through chance connections, but these remain unpredictable and unrepeatable. Our systematic archives and recombination mechanisms transform these accidental discoveries into methodical exploration.

This creates what complexity theorists call "adjacent possibles" - innovations that become visible only after certain preliminary discoveries. By systematically mapping and connecting these possibilities across domains, we create an innovation landscape that continuously expands and enriches with each discovery cycle. Each primitive added to our archives doesn't just incrementally enhance future discovery - it combines with existing primitives to create entirely new possibility spaces.
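A small piece of arithmetic illustrates why each added primitive does more than increment the archive. Counting only pairwise combinations, and making no claim about how many of them are actually useful, an archive of n primitives contains n(n-1)/2 possible pairs, so the n+1th primitive unlocks n new ones. The function name below is our own.

```python
from math import comb


def new_pairings(existing: int) -> int:
    """Pairwise combinations unlocked by adding one primitive to `existing` primitives."""
    return comb(existing + 1, 2) - comb(existing, 2)


for n in (10, 100, 1000):
    # archive size, total possible pairs, pairs unlocked by the next primitive
    print(n, comb(n, 2), new_pairings(n))
# 10 45 10
# 100 4950 100
# 1000 499500 1000
```

The marginal contribution of a new primitive therefore grows with the size of the archive it joins, and higher-order combinations of three or more primitives grow faster still.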